Machine Learning (ML) Viva Questions with Answers – Sem 6 AI-DS/ML


Module 1: Introduction to Machine Learning

Q1: What are the main issues in Machine Learning?

A1: The main issues in Machine Learning include overfitting, underfitting, bias-variance tradeoff, data scarcity, feature selection, and interpretability.

Q2: Explain the concept of Supervised Learning.

A2: Supervised Learning is a type of machine learning where the algorithm is trained on labeled data, and it learns to predict the output from the input data. It involves a mapping from input variables to output variables based on example input-output pairs.

Q3: Define the terms Classification and Clustering in the context of machine learning.

A3:

- Classification: Classification is a type of supervised learning where the goal is to categorize input data into predefined classes or categories based on their features.

- Clustering: Clustering is an unsupervised learning technique where the algorithm groups similar data points together into clusters based on the inherent structure of the data.

Q4: What are the steps involved in developing a Machine Learning application?

A4: The steps involved in developing a Machine Learning application typically include:

- Problem Definition

- Data Collection

- Data Preprocessing

- Feature Selection/Engineering

- Model Selection

- Training the Model

- Evaluating the Model

- Fine-tuning the Model

- Deployment

- Monitoring and Maintenance

Q5: Explain the terms Training, Testing, and Validation datasets in machine learning.

A5:

- Training Dataset: The training dataset is used to train the machine learning model. It consists of a set of input-output pairs used by the algorithm to learn the relationship between the input features and the output labels.

- Testing Dataset: The testing dataset is used to evaluate the performance of the trained model. It contains unseen data that the model has not been exposed to during training.

- Validation Dataset: The validation dataset is used to tune the model's hyperparameters and to compare candidate models, which helps detect and avoid overfitting. It is kept separate from the training and testing datasets, so that the test set still gives an unbiased estimate of the final model's generalization performance.

Q6: What is cross-validation in machine learning?

A6: Cross-validation is a technique used to assess how well a machine learning model generalizes. In k-fold cross-validation, the dataset is partitioned into k subsets (folds); the model is trained on k−1 folds and evaluated on the remaining fold. This is repeated k times so that each fold serves exactly once as the evaluation set, and the k scores are averaged. This gives a more reliable estimate of the model's performance than a single train/test split.
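A minimal sketch of k-fold splitting in plain Python, assuming contiguous folds without shuffling; the function name `k_fold_indices` is hypothetical (libraries such as scikit-learn offer a `KFold` class for the same purpose). It only produces the index splits; training and scoring a model on each split is left out.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Samples are split into k contiguous folds (no shuffling, for clarity);
    each fold is used exactly once as the validation set.
    """
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(10, 3))
for train_idx, val_idx in folds:
    print(len(train_idx), val_idx)
```

In practice, the data is usually shuffled first (or stratified by class) before being cut into folds.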

Q7: Explain the concepts of overfitting and underfitting in machine learning.

A7:

- Overfitting: Overfitting occurs when a machine learning model learns the training data too well, capturing noise or random fluctuations in the data instead of the underlying pattern. This leads to poor performance on unseen data.

- Underfitting: Underfitting occurs when a machine learning model is too simple to capture the underlying structure of the data. It fails to learn from the training data and performs poorly on both the training and testing datasets.

Q8: What are the performance measures used to evaluate the quality of a machine learning model?

A8: Performance measures used to evaluate the quality of a machine learning model include:

- Confusion Matrix

- Accuracy

- Recall

- Precision

- Specificity

- F1 Score

- Root Mean Square Error (RMSE), for regression models
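Unlike the other measures in this list, RMSE applies to regression outputs rather than class labels. A minimal sketch of the formula in plain Python; the function name `rmse` is hypothetical.

```python
import math

def rmse(y_true, y_pred):
    """Root Mean Square Error for regression: sqrt(mean((y - y_hat)^2))."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

print(rmse([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]))  # errors 1, 0, -2 -> sqrt(5/3)
```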

Q9: Define the terms Recall, Precision, and Specificity in the context of performance evaluation.

A9:

- Recall (Sensitivity): Recall measures the ability of a classifier to correctly identify positive instances out of all actual positive instances. It is calculated as the ratio of true positives to the sum of true positives and false negatives.

- Precision: Precision measures the accuracy of positive predictions made by the classifier. It is calculated as the ratio of true positives to the sum of true positives and false positives.

- Specificity: Specificity measures the ability of a classifier to correctly identify negative instances out of all actual negative instances. It is calculated as the ratio of true negatives to the sum of true negatives and false positives.

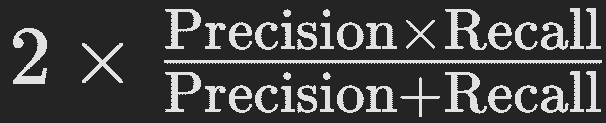
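The definitions above can be sketched directly from the four confusion-matrix counts. This is a minimal illustration with made-up labels, assuming `1` is the positive class; the helper `confusion_counts` is hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN for a binary problem."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]
tp, fp, tn, fn = confusion_counts(y_true, y_pred)   # 3, 2, 2, 1

recall = tp / (tp + fn)          # 3 / 4 = 0.75
precision = tp / (tp + fp)       # 3 / 5 = 0.6
specificity = tn / (tn + fp)     # 2 / 4 = 0.5
accuracy = (tp + tn) / len(y_true)   # 5 / 8 = 0.625
print(recall, precision, specificity, accuracy)
```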

Q10: What is the F1 Score in machine learning, and why is it useful?

A10: The F1 Score is a single metric that combines precision and recall. It is their harmonic mean and is calculated as

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

The F1 Score provides a balance between precision and recall: because it is a harmonic mean, it is high only when both are high. This makes it useful for evaluating models when the classes are imbalanced (where accuracy alone can be misleading) or when both false positives and false negatives matter.
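A short sketch showing why the harmonic mean is used: a classifier with high precision but very low recall still gets a low F1, whereas a simple average would hide the weakness. The function name `f1_score` is hypothetical here (scikit-learn has its own `f1_score`, which works on labels rather than on precision and recall).

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (defined as 0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# A classifier with high precision but poor recall:
print(f1_score(0.9, 0.1))   # harmonic mean, about 0.18
print((0.9 + 0.1) / 2)      # arithmetic mean would be 0.5
```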

Module 2: Mathematical Foundation for ML

Q1: What are the components of a System of Linear Equations?

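A1: A System of Linear Equations is a set of linear equations in the same unknowns. Its components are the coefficients, the variables (unknowns), and the constants on the right-hand side; in matrix form these are the coefficient matrix $A$, the vector of unknowns $x$, and the constant vector $b$, written compactly as $Ax = b$. Each equation is linear: every variable appears only to the first power, multiplied by a constant coefficient, and the terms are combined by addition or subtraction.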
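A small worked example, assuming NumPy is available: the two equations are written as a coefficient matrix and a constant vector, and `np.linalg.solve` returns the unknowns.

```python
import numpy as np

# 2x + y  = 5
#  x - 3y = -1
A = np.array([[2.0, 1.0],
              [1.0, -3.0]])   # coefficient matrix
b = np.array([5.0, -1.0])     # constant vector

x = np.linalg.solve(A, b)     # vector of unknowns
print(x)                      # [2. 1.]
```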

Q2: Define Norms and their significance in machine learning.

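A2: Norms are mathematical functions that measure the “size” or “length” of vectors; common examples are the L1 norm (sum of absolute values) and the Euclidean L2 norm. In machine learning, the norm of the difference between two vectors measures the distance between them, and norms of the weight vector define regularization terms in optimization problems (for example, the L1 penalty in Lasso and the L2 penalty in Ridge Regression).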
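A minimal plain-Python sketch of three common norms, a distance computed as the norm of a difference, and an L2 regularization term; the helper names and the regularization strength `lam` are illustrative assumptions.

```python
import math

def l1_norm(v):
    return sum(abs(x) for x in v)           # sum of absolute values

def l2_norm(v):
    return math.sqrt(sum(x * x for x in v)) # Euclidean length

def max_norm(v):
    return max(abs(x) for x in v)           # largest absolute entry

v = [3.0, -4.0]
print(l1_norm(v), l2_norm(v), max_norm(v))  # 7.0 5.0 4.0

# Distance between two vectors = norm of their difference
u = [0.0, 0.0]
print(l2_norm([a - b for a, b in zip(v, u)]))  # 5.0

# An L2 regularization term added to a loss, with an assumed strength lam
lam = 0.1
weights = [0.5, -1.0, 2.0]
penalty = lam * l2_norm(weights) ** 2        # 0.1 * 5.25
```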

Q3: Explain the concept of Inner Products and their role in machine learning.

A3: Inner Products are a generalization of the dot product between vectors. In machine learning, inner products are used to measure the similarity between vectors and are fundamental in various algorithms such as support vector machines (SVMs) and kernel methods.
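A sketch of the standard inner product and of cosine similarity, the normalized inner product often used as a similarity measure; a value of 0 corresponds to orthogonal vectors. Helper names are hypothetical.

```python
import math

def dot(u, v):
    """Standard inner product (dot product) in R^n."""
    return sum(a * b for a, b in zip(u, v))

def cosine_similarity(u, v):
    """Inner product normalized by the vector lengths: 1 = same direction, 0 = orthogonal."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

print(dot([1, 2, 3], [4, 5, 6]))            # 32
print(cosine_similarity([1, 0], [0, 1]))    # 0.0 (orthogonal vectors)
print(cosine_similarity([1, 1], [2, 2]))    # about 1.0 (same direction)
```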

Q4: How is the length of a vector calculated, and what does it represent?

A4: The length of a vector, also known as its magnitude or norm, is calculated using a norm function such as the Euclidean (L2) norm, $\|x\|_2 = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}$. It represents the distance from the origin to the point represented by the vector in the vector space.

Q5: Define Orthogonal Vectors and explain their significance.

A5: Orthogonal vectors are vectors that are perpendicular to each other, meaning their inner product is zero. In machine learning, orthogonal vectors are used in various contexts, such as in principal component analysis (PCA) and orthogonal projection.

Q6: What are Symmetric Positive Definite Matrices, and why are they important in machine learning?

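A6: Symmetric Positive Definite (SPD) Matrices are square matrices that are symmetric (equal to their transpose) and satisfy $x^T A x > 0$ for every non-zero vector $x$; equivalently, all their eigenvalues are positive. They are important in machine learning because they are always invertible, a quadratic objective whose matrix is SPD has a unique minimum, and matrices of this kind arise naturally as covariance and kernel matrices (which are positive semidefinite in general and positive definite under mild conditions).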
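A minimal check for the SPD property, assuming NumPy: symmetry is tested directly, and positive definiteness via `np.linalg.cholesky`, which raises `LinAlgError` when the matrix is not positive definite. The function name and tolerance are illustrative choices.

```python
import numpy as np

def is_symmetric_positive_definite(A, tol=1e-10):
    """Check symmetry, then positive definiteness via a Cholesky factorization."""
    A = np.asarray(A, dtype=float)
    if A.shape[0] != A.shape[1] or not np.allclose(A, A.T, atol=tol):
        return False
    try:
        np.linalg.cholesky(A)   # succeeds only for positive definite matrices
        return True
    except np.linalg.LinAlgError:
        return False

A = np.array([[2.0, -1.0], [-1.0, 2.0]])   # eigenvalues 1 and 3
B = np.array([[1.0, 2.0], [2.0, 1.0]])     # eigenvalues -1 and 3
print(is_symmetric_positive_definite(A))   # True
print(is_symmetric_positive_definite(B))   # False

x = np.array([1.0, 1.0])
print(x @ A @ x)                           # 2.0, positive as expected for SPD A
```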

Q7: Explain the concepts of Determinant and Trace of a matrix.

A7:

- Determinant: The determinant of a square matrix is a scalar value that represents the volume scaling factor of the linear transformation described by the matrix. It is used to determine whether a matrix is invertible: a square matrix is invertible exactly when its determinant is non-zero.

- Trace: The trace of a square matrix is the sum of its diagonal elements, which equals the sum of its eigenvalues. It is often used in optimization problems and to characterize the behavior of linear transformations.
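A quick numerical check, assuming NumPy, of two standard facts: the determinant equals the product of the eigenvalues and the trace equals their sum; a singular matrix has determinant zero.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

det = np.linalg.det(A)          # 4*3 - 1*2 = 10
tr = np.trace(A)                # 4 + 3 = 7
eigvals = np.linalg.eigvals(A)  # 5 and 2

print(det, np.prod(eigvals))    # both about 10: determinant = product of eigenvalues
print(tr, np.sum(eigvals))      # both about 7: trace = sum of eigenvalues

# A zero determinant signals a singular (non-invertible) matrix
S = np.array([[1.0, 2.0], [2.0, 4.0]])
print(np.isclose(np.linalg.det(S), 0.0))   # True
```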

Q8: Define Eigenvalues and Eigenvectors of a matrix and their significance in machine learning.

A8:

- Eigenvalues and Eigenvectors: Eigenvalues are scalar values that represent the scaling factor of the corresponding eigenvectors when a matrix is applied to them. Eigenvectors are non-zero vectors that remain in the same direction after the matrix transformation, only scaled by the corresponding eigenvalue.

- Significance: Eigenvalues and eigenvectors are important in machine learning for dimensionality reduction techniques like PCA and for understanding the behavior of linear transformations in various algorithms.
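A sketch, assuming NumPy, that verifies the defining property $Av = \lambda v$ for each eigenpair of a small symmetric matrix; `np.linalg.eigh` is used because it is meant for symmetric matrices and returns eigenvalues in ascending order.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])                      # symmetric, so eigh applies
eigenvalues, eigenvectors = np.linalg.eigh(A)   # ascending eigenvalues; columns are eigenvectors

for lam, v in zip(eigenvalues, eigenvectors.T):
    # A v points in the same direction as v, only scaled by lambda
    print(lam, np.allclose(A @ v, lam * v))
```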

Q9: What are Orthogonal Projections, and how are they used in machine learning?

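A9: An orthogonal projection maps a vector onto a subspace by finding the closest point in that subspace: the projection itself lies in the subspace, and the residual (the difference between the original vector and its projection) is orthogonal to the subspace. In machine learning, orthogonal projections are used in dimensionality reduction techniques such as PCA (projecting data onto the principal components) and in least-squares regression, where the fitted values are the orthogonal projection of the target vector onto the column space of the data matrix.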
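A minimal sketch, assuming NumPy and a matrix `A` with full column rank, of projecting a vector onto the column space of `A` by solving the normal equations; the helper name is hypothetical. The printout confirms that the residual is orthogonal to the subspace.

```python
import numpy as np

def project_onto_columns(A, b):
    """Orthogonally project b onto the column space of A (A must have full column rank)."""
    # Solve the normal equations A^T A c = A^T b for the coefficients c
    c = np.linalg.solve(A.T @ A, A.T @ b)
    return A @ c

A = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])      # columns span the x-y plane in R^3
b = np.array([3.0, 4.0, 5.0])

p = project_onto_columns(A, b)
residual = b - p
print(p)                        # [3. 4. 0.]: the projection lies in the subspace
print(A.T @ residual)           # [0. 0.]: the residual is orthogonal to the subspace
```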

Q10: Explain the concept of Singular Value Decomposition (SVD) and its applications in machine learning.

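A10: Singular Value Decomposition (SVD) is a matrix factorization method that decomposes any $m \times n$ matrix $A$ into three matrices, $A = U \Sigma V^T$, where $U$ and $V$ are orthogonal matrices and $\Sigma$ is a diagonal matrix of non-negative singular values, usually listed in decreasing order. It has various applications in machine learning, including dimensionality reduction, data compression (keeping only the largest singular values gives the best low-rank approximation), and collaborative filtering in recommendation systems.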
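A sketch using NumPy's `np.linalg.svd` (with `full_matrices=False`, which returns the compact factors): it reconstructs the matrix from its factors and builds a rank-1 approximation by keeping only the largest singular value.

```python
import numpy as np

A = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

U, s, Vt = np.linalg.svd(A, full_matrices=False)   # s holds singular values, largest first
print(s)                                            # sqrt(12) and sqrt(10)
print(np.allclose(A, U @ np.diag(s) @ Vt))          # True: A = U Sigma V^T

# Rank-k approximation: keep only the k largest singular values
k = 1
A_k = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]
print(np.linalg.norm(A - A_k))                      # equals the dropped singular value s[1]
```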

Module 3: Linear Models

Q1: What is the least-squares method, and how is it used in linear regression?

A1: The least-squares method is a technique used to estimate the parameters of a linear model by minimizing the sum of the squared differences between the observed and predicted values. In linear regression, it is used to find the line (or hyperplane in higher dimensions) that best fits the given data points.
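A small least-squares line fit, assuming NumPy; the data points are made up for illustration. A column of ones in the design matrix provides the intercept, and `np.linalg.lstsq` minimizes the sum of squared residuals.

```python
import numpy as np

x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 7.1, 8.8])      # roughly y = 2x + 1 with noise

# Design matrix with a column of ones for the intercept
X = np.column_stack([np.ones_like(x), x])

# Least-squares solution minimizes ||X w - y||^2
w, residuals, rank, sv = np.linalg.lstsq(X, y, rcond=None)
intercept, slope = w
print(intercept, slope)                      # about 1.1 and 1.96
```

At the solution, the residual vector is orthogonal to every column of `X` (the normal equations $X^T(Xw - y) = 0$ hold).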

Q2: Explain Multivariate Linear Regression and its difference from simple linear regression.

A2: Multivariate Linear Regression is an extension of simple linear regression where multiple independent variables are used to predict the dependent variable. In simple linear regression, only one independent variable is used.

Q3: What is Regularized Regression, and why is it used in machine learning?

A3: Regularized Regression is a technique used to prevent overfitting in regression models by adding a penalty term on the model weights to the loss function. It includes methods like Ridge Regression (L2 penalty) and Lasso Regression (L1 penalty, which can shrink some weights exactly to zero). Regularization is used in machine learning to improve the generalization performance of models by discouraging overly complex models.
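A minimal sketch of Ridge Regression using its closed-form solution $w = (X^T X + \lambda I)^{-1} X^T y$, assuming NumPy, synthetic data, and no intercept term for brevity; the function name and the values of `lam` are illustrative. Lasso has no closed form and needs an iterative solver, so it is not shown.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Closed-form Ridge Regression: w = (X^T X + lam * I)^(-1) X^T y (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
y = X @ np.array([2.0, -1.0, 0.5]) + 0.1 * rng.normal(size=20)

for lam in [0.0, 1.0, 100.0]:
    w = ridge_fit(X, y, lam)
    print(lam, np.round(w, 3), np.linalg.norm(w))   # weights shrink as lam grows
```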

Q4: How can Least-Squares Regression be used for classification tasks?

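A4: Least-Squares Regression can be used for classification by encoding the class labels as numeric targets (for example, −1 and +1), fitting the regression model to these targets, and thresholding the predicted values. In binary classification, if the predicted value is above the threshold (e.g., 0 for −1/+1 targets), the input is assigned to one class; otherwise, it is assigned to the other.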
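A sketch of this idea, assuming NumPy, a tiny made-up two-class dataset, targets encoded as −1/+1, and a threshold of 0; the `predict` helper is hypothetical.

```python
import numpy as np

# Two classes encoded as targets -1 and +1
X = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.5],          # class +1
              [-1.0, -1.0], [-1.5, -0.5], [-2.0, -1.5]])   # class -1
t = np.array([1, 1, 1, -1, -1, -1], dtype=float)

Xb = np.column_stack([np.ones(len(X)), X])   # add a bias column
w, *_ = np.linalg.lstsq(Xb, t, rcond=None)   # fit as if it were regression

def predict(points):
    scores = np.column_stack([np.ones(len(points)), points]) @ w
    return np.where(scores >= 0.0, 1, -1)    # threshold the regression output at 0

print(predict(X))                                        # recovers the training labels
print(predict(np.array([[3.0, 3.0], [-3.0, -2.0]])))     # [ 1 -1]
```

Least squares penalizes points that are "too correct" (far beyond the target value), so outliers can shift the boundary; this is one reason dedicated classifiers such as logistic regression or SVMs are usually preferred.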

Q5: What are Support Vector Machines (SVMs), and what are their advantages in classification?

A5: Support Vector Machines (SVMs) are supervised learning models used for classification and regression analysis. They work by finding the hyperplane that best separates the classes in the feature space. The advantages of SVMs include their ability to handle high-dimensional data, their effectiveness in cases where the number of dimensions exceeds the number of samples, and their versatility in handling different types of data through the use of different kernel functions.

Q6: Explain the concept of a hyperplane in the context of Support Vector Machines.

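A6: In the context of Support Vector Machines, a hyperplane is a decision boundary that separates the classes in the feature space. In a d-dimensional feature space, it is a (d−1)-dimensional affine subspace (a line in 2-D, a plane in 3-D), written as $w \cdot x + b = 0$, where $w$ is the normal (weight) vector and $b$ is the bias. For binary classification, points with $w \cdot x + b > 0$ are assigned to one class and the rest to the other. SVMs aim to find the hyperplane that maximizes the margin, i.e., the distance between the hyperplane and the nearest data points from each class (the support vectors).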
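A plain-Python sketch of the geometry, writing the hyperplane as $w \cdot x + b = 0$ with example values for `w` and `b` (assumptions for illustration): the signed distance of a point is $(w \cdot x + b)/\|w\|$, and for the canonical SVM scaling, where $|w \cdot x + b| = 1$ on the support vectors, the margin width is $2/\|w\|$.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w·x + b = 0."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return score / math.sqrt(sum(wi * wi for wi in w))

w, b = [1.0, 1.0], -2.0                     # the line x1 + x2 = 2 in a 2-D feature space
print(signed_distance(w, b, [3.0, 3.0]))    # about 2.83, positive side
print(signed_distance(w, b, [0.0, 0.0]))    # about -1.41, negative side

# Margin width for a canonically scaled SVM hyperplane
margin = 2 / math.sqrt(sum(wi * wi for wi in w))
```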

Q7: What is the kernel trick in Support Vector Machines, and why is it useful?

A7: The kernel trick is a method used to implicitly map the input features into a higher-dimensional space without explicitly calculating the transformation. It allows SVMs to efficiently handle non-linear decision boundaries by computing the dot product in the higher-dimensional space using a kernel function. This makes SVMs versatile and powerful in capturing complex patterns in the data.
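A small plain-Python demonstration of the kernel trick for 2-D inputs: the degree-2 polynomial kernel $(x \cdot z)^2$, computed in the original space, gives the same value as a dot product after the explicit 3-D mapping $\phi(x) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)$. The helper names are hypothetical.

```python
import math

def phi(x):
    """Explicit feature map for 2-D input matching the kernel (x·z)^2."""
    x1, x2 = x
    return [x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2]

def poly_kernel(x, z):
    """Degree-2 homogeneous polynomial kernel, computed in the original 2-D space."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

x, z = [1.0, 2.0], [3.0, -1.0]
print(poly_kernel(x, z))        # (3 - 2)^2 = 1.0
print(dot(phi(x), phi(z)))      # about 1.0, the same value via the 3-D mapping
```

For kernels such as RBF, the implicit feature space is infinite-dimensional, so computing the kernel directly is the only practical option.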

Q8: Explain the difference between hard margin and soft margin SVMs.

A8:

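- Hard Margin SVM: Hard margin SVMs aim to find the maximum-margin hyperplane that perfectly separates the classes in the feature space, allowing no point inside the margin. They require the data to be linearly separable (otherwise no such hyperplane exists) and are highly sensitive to outliers, since a single outlier can drastically change the boundary.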

- Soft Margin SVM: Soft margin SVMs allow for some misclassification by introducing a penalty for points that fall within the margin or on the wrong side of the hyperplane. They are more robust to outliers and can handle data that is not perfectly separable.
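A sketch of the standard soft-margin primal objective, $\frac{1}{2}\|w\|^2 + C \sum_i \max(0,\, 1 - y_i(w \cdot x_i + b))$, evaluated in plain Python for fixed example values of `w`, `b`, and `C` (assumptions for illustration; a real SVM solver would minimize this over `w` and `b`).

```python
def soft_margin_objective(w, b, X, y, C):
    """Primal soft-margin SVM objective: 0.5*||w||^2 + C * sum of hinge losses.

    The hinge loss max(0, 1 - y_i (w·x_i + b)) is zero for points outside the
    margin on the correct side, and grows for points inside the margin or misclassified.
    """
    reg = 0.5 * sum(wi * wi for wi in w)
    hinge = 0.0
    for xi, yi in zip(X, y):
        score = sum(wj * xj for wj, xj in zip(w, xi)) + b
        hinge += max(0.0, 1.0 - yi * score)
    return reg + C * hinge

X = [[2.0, 2.0], [0.5, 0.5], [-2.0, -2.0]]
y = [1, 1, -1]
w, b = [0.5, 0.5], 0.0
print(soft_margin_objective(w, b, X, y, C=1.0))   # 0.25 + 0.5 = 0.75 (middle point is inside the margin)
```

A larger `C` penalizes margin violations more heavily, pushing the solution toward hard-margin behavior.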

Q9: What are the parameters involved in tuning a Support Vector Machine?

A9: The parameters involved in tuning a Support Vector Machine include the choice of kernel function, the regularization parameter C (for soft margin SVMs), and the kernel parameters (such as gamma for the RBF kernel). Additionally, the choice of optimization algorithm and its hyperparameters may also affect the performance of the SVM.

Q10: How does the choice of kernel function affect the performance of a Support Vector Machine?

A10: The choice of kernel function in a Support Vector Machine affects its ability to capture complex patterns in the data and the shape of the decision boundary. Different kernel functions are suitable for different types of data and may result in different classification performance. For example, a linear kernel suits data that is (nearly) linearly separable or very high-dimensional, while the RBF kernel can model more complex, non-linear boundaries. It is important to choose an appropriate kernel function based on the characteristics of the data and the problem at hand.
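A plain-Python sketch of the RBF kernel $\exp(-\gamma \|x - z\|^2)$, showing how the kernel parameter gamma controls how quickly similarity decays with distance. A large gamma makes each training point influence only a small neighbourhood (more flexible boundaries, higher risk of overfitting); a small gamma gives smoother boundaries. The gamma values are arbitrary examples.

```python
import math

def rbf_kernel(x, z, gamma):
    """Gaussian (RBF) kernel: exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

x, z = [0.0, 0.0], [1.0, 1.0]        # squared distance = 2
for gamma in [0.1, 1.0, 10.0]:
    print(gamma, rbf_kernel(x, z, gamma))
# gamma=0.1 gives about 0.82, gamma=1 about 0.14, gamma=10 about 2e-9
```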

Module 4: Clustering

Q1: What is the Hebbian Learning rule, and how is it related to clustering?

A1: The Hebbian Learning rule is a neurobiological learning rule that states “neurons that fire together, wire together.” In the context of clustering, it can be used to update the connections between neurons (or nodes) in a neural network to learn the underlying structure of the input data. Specifically, it can be applied in self-organizing maps (SOMs) or neural gas algorithms to adjust the weights of neurons based on the similarity of input patterns.
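A minimal sketch of the plain Hebbian update $\Delta w_i = \eta\, x_i\, y$ in Python, with an assumed learning rate of 0.1: only the weights of inputs that are active when the neuron fires are strengthened. The plain rule lets weights grow without bound, which is why practical variants add normalization or a decay term.

```python
def hebbian_update(weights, x, y, lr=0.1):
    """Plain Hebbian rule: w_i <- w_i + lr * x_i * y.

    A weight grows when its input x_i and the neuron's output y are active together.
    """
    return [w + lr * xi * y for w, xi in zip(weights, x)]

weights = [0.0, 0.0, 0.0]
x = [1.0, 0.0, 1.0]     # inputs 0 and 2 are active
y = 1.0                 # the neuron fires
for _ in range(3):
    weights = hebbian_update(weights, x, y)
print(weights)          # about [0.3, 0.0, 0.3]
```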

Q2: Explain the Expectation-Maximization (EM) algorithm for clustering.

A2: The Expectation-Maximization (EM) algorithm is an iterative optimization algorithm used to find the maximum likelihood estimates of parameters in probabilistic models with latent variables, such as Gaussian Mixture Models (GMMs) for clustering. It consists of two main steps:

- Expectation (E) Step: Compute the expected value of the latent variables given the observed data and the current model parameters.

- Maximization (M) Step: Update the model parameters to maximize the likelihood of the observed data, using the expected values computed in the E-step.

These steps are iterated until convergence, where the parameters no longer change significantly.
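A compact sketch of EM for a two-component, one-dimensional Gaussian Mixture Model in plain Python. The data are synthetic (drawn around −2 and 3), and the initialization (means at the data extremes) and fixed iteration count are simplifying assumptions rather than a recommended recipe.

```python
import math
import random

def gaussian_pdf(x, mean, var):
    """Density of a 1-D normal distribution N(mean, var) at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)

def em_gmm_1d(data, n_iter=50):
    """EM for a two-component 1-D Gaussian Mixture Model."""
    n = len(data)
    mu = sum(data) / n
    var0 = sum((x - mu) ** 2 for x in data) / n
    # Initialization: means at the data extremes, overall variance, equal weights
    means = [min(data), max(data)]
    variances = [var0, var0]
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: responsibility r[k] = P(component k | x) for every point
        resp = []
        for x in data:
            p = [weights[k] * gaussian_pdf(x, means[k], variances[k]) for k in range(2)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M-step: re-estimate mixing weights, means and variances from the responsibilities
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            variances[k] = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
    return weights, means, variances

rng = random.Random(0)
data = [rng.gauss(-2.0, 1.0) for _ in range(200)] + [rng.gauss(3.0, 1.0) for _ in range(200)]
weights, means, variances = em_gmm_1d(data)
print([round(m, 2) for m in means])   # close to the true means -2 and 3
```

The responsibilities computed in the E-step are the soft cluster assignments; taking the component with the largest responsibility gives a hard clustering.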

Q3: How does the EM algorithm handle missing data in clustering?

A3: The EM algorithm handles missing data by treating the missing feature values as additional latent variables, alongside the unobserved cluster assignments. In the E-step, it uses the observed values and the current model parameters to compute the expected cluster assignments and the expected values (or distributions) of the missing entries; in the M-step, it updates the parameters using these expectations. This allows EM to use incomplete records in the clustering process instead of discarding them. Each iteration does not decrease the likelihood, so the algorithm converges to a (typically local) maximum likelihood solution.

Q4: What are the advantages and disadvantages of the Expectation-Maximization algorithm for clustering?

A4:

- Advantages:

  - It is a general-purpose algorithm that can handle various types of data and model distributions.

  - It provides probabilistic cluster assignments, allowing for soft clustering and uncertainty estimation.

  - It can handle missing data by incorporating latent variables into the model.

- Disadvantages:

  - It can be computationally expensive, especially for large datasets and high-dimensional data.

  - It is sensitive to the choice of initialization and may converge to local optima.

  - It may require a large number of iterations to converge, especially if the data is poorly separated or the clusters are imbalanced.

Module 5: Classification Models

Q1: What are the fundamental concepts behind the evolution of Neural Networks, and how do they relate to biological neurons?

A1: The evolution of Neural Networks is inspired by the functioning of biological neurons in the brain. Biological neurons transmit signals through synapses to other neurons, where the strength of the connection (synaptic weight) determines the impact of the signal. Artificial Neural Networks (ANNs) mimic this process, where artificial neurons (nodes) receive inputs, apply weights to them, and produce an output using an activation function.

Q2: Explain the McCulloch-Pitts Model and its significance in the development of Artificial Neural Networks.

A2: The McCulloch-Pitts Model is a simplified mathematical model of a neuron proposed by Warren McCulloch and Walter Pitts in 1943. It describes how a binary output (firing or not firing) can be generated by a neuron based on the inputs it receives and predefined thresholds. While simple, this model laid the foundation for later developments in artificial neural networks and computational neuroscience.
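A minimal sketch of a McCulloch-Pitts neuron in Python: binary inputs, fixed hand-chosen weights and threshold (nothing is learned), and a binary output. With unit weights, a threshold of 2 realizes logical AND and a threshold of 1 realizes OR; these settings are illustrative choices.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Fire (1) if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]
print([mcculloch_pitts(p, (1, 1), threshold=2) for p in patterns])  # AND: [0, 0, 0, 1]
print([mcculloch_pitts(p, (1, 1), threshold=1) for p in patterns])  # OR:  [0, 1, 1, 1]
```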

Q3: How is a simple network designed, and what are the limitations of the Perceptron model?

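A3: A simple network is designed by connecting artificial neurons in layers, where each neuron receives inputs, computes a weighted sum plus a bias, and passes the result through an activation function to produce an output. The main limitations of the single-layer Perceptron are that it can only learn linearly separable patterns (it cannot represent XOR, for example), that its learning rule does not converge when the data is not linearly separable, and that the boundary it finds depends on the initial weights and the order in which training samples are presented.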

Q4: What are Activation functions, and what types are commonly used in Artificial Neural Networks?

A4: Activation functions introduce non-linearities into the output of artificial neurons, allowing neural networks to model complex relationships in the data. Common types of activation functions include binary (step function), bipolar (sign function), continuous (sigmoid function), and ramp functions.
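Plain-Python sketches of these activation functions. The threshold convention (firing at `>=` rather than `>`) and the steepness parameter `lam` are assumptions, since textbooks differ; the bipolar sigmoid shown equals $\tanh(\lambda x / 2)$.

```python
import math

def binary_step(x, theta=0.0):
    return 1 if x >= theta else 0                     # outputs {0, 1}

def bipolar_step(x, theta=0.0):
    return 1 if x >= theta else -1                    # sign-like, outputs {-1, +1}

def binary_sigmoid(x, lam=1.0):
    return 1.0 / (1.0 + math.exp(-lam * x))           # range (0, 1)

def bipolar_sigmoid(x, lam=1.0):
    return 2.0 / (1.0 + math.exp(-lam * x)) - 1.0     # range (-1, 1), equals tanh(lam*x/2)

def ramp(x):
    return 0.0 if x < 0 else (1.0 if x > 1 else x)    # linear between 0 and 1, clipped outside

for f in (binary_step, bipolar_step, binary_sigmoid, bipolar_sigmoid, ramp):
    print(f.__name__, [round(f(v), 3) for v in (-2.0, 0.0, 0.5, 2.0)])
```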

Q5: Explain the Perceptron Learning Rule and its significance in training a single-layer neural network.

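A5: The Perceptron Learning Rule is an iterative algorithm used to adjust the weights of a single-layer perceptron network. For each training sample, it compares the thresholded output $y$ with the target $t$ and, only if they differ, updates the weights as $w \leftarrow w + \eta (t - y) x$, where $\eta$ is the learning rate. If the training data is linearly separable, the rule is guaranteed to find weights that classify every training sample correctly after a finite number of updates (the perceptron convergence theorem).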
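A minimal sketch of the Perceptron Learning Rule in plain Python, trained on logical AND with bipolar inputs and targets (a linearly separable problem); the learning rate, epoch limit, and zero initialization are illustrative assumptions.

```python
def train_perceptron(samples, targets, lr=1.0, epochs=20):
    """Perceptron learning rule with a bipolar step output.

    w <- w + lr * (t - y) * x; no update is made when the prediction y is already correct.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, t in zip(samples, targets):
            y = 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1
            if y != t:
                w = [wi + lr * (t - y) * xi for wi, xi in zip(w, x)]
                b += lr * (t - y)
                errors += 1
        if errors == 0:        # converged: every training sample is classified correctly
            break
    return w, b

# Logical AND with bipolar inputs and targets
X = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
T = [-1, -1, -1, 1]
w, b = train_perceptron(X, T)
preds = [1 if w[0] * x1 + w[1] * x2 + b >= 0 else -1 for x1, x2 in X]
print(w, b, preds)     # preds matches T
```

Running the same code on XOR targets (`[-1, 1, 1, -1]`) never reaches zero errors, which illustrates the linear-separability limitation.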

Q6: What is the Delta Learning Rule (LMS-Widrow Hoff), and how does it differ from the Perceptron Learning Rule?

A6: The Delta Learning Rule, also known as the LMS (Least Mean Squares) or Widrow-Hoff rule, is a gradient-descent algorithm used to adjust the weights of connections in neural networks. Unlike the Perceptron Learning Rule, which is designed for binary classification and updates weights only on misclassifications, the Delta rule uses the neuron's continuous (net) output $y_{in}$ and updates the weights in proportion to the error on every sample, $w \leftarrow w + \eta (t - y_{in}) x$. Because it minimizes the squared error by following the gradient of the error surface, it can also be used for regression tasks.
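A plain-Python sketch of the Delta (LMS) rule learning a noise-free linear target $t = 3x_1 - 2x_2 + 1$ from synthetic data; the learning rate, epoch count, and data generator are assumptions for illustration.

```python
import random

def train_delta_rule(samples, targets, lr=0.05, epochs=200):
    """LMS / Widrow-Hoff rule: w <- w + lr * (t - y_in) * x, with linear output y_in = w·x + b.

    Each update is a gradient-descent step on the squared error 0.5 * (t - y_in)^2.
    """
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            y_in = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = t - y_in
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

# Noise-free targets from t = 3*x1 - 2*x2 + 1
rng = random.Random(1)
X = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(50)]
T = [3 * x1 - 2 * x2 + 1 for x1, x2 in X]
w, b = train_delta_rule(X, T)
print([round(wi, 3) for wi in w], round(b, 3))   # close to [3, -2] and 1
```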

Q7: How is a Multi-layer perceptron network structured, and what is the process for adjusting weights of hidden layers?

A7: A Multi-layer perceptron (MLP) network consists of an input layer, one or more hidden layers, and an output layer. The weights between neurons are adjusted using the error backpropagation algorithm, which involves computing the error at the output layer and propagating it backward through the network to adjust the weights of hidden layers.

Q8: What is the Error backpropagation algorithm, and how does it work in training a neural network?

A8: The Error backpropagation algorithm is the standard method for training neural networks with multiple layers. In a forward pass, the network computes its outputs and the error at the output layer. In a backward pass, this error is propagated back through the network using the chain rule, which gives the gradient of the error with respect to every weight, including the weights of the hidden layers. Gradient descent then adjusts each weight in the direction that reduces the error. These steps are repeated over the training data until the error converges.
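A compact NumPy sketch of backpropagation for a network with one sigmoid hidden layer, a linear output, and a mean-squared-error loss (architecture, sizes, and random data are all illustrative assumptions). It checks one backpropagated gradient against a finite-difference estimate, then takes one gradient-descent step.

```python
import numpy as np

def forward_backward(params, X, y):
    """One hidden layer (sigmoid) + linear output, mean squared error loss.

    Returns the loss and the gradients computed by backpropagation (chain rule).
    """
    W1, b1, W2, b2 = params
    # Forward pass
    z1 = X @ W1 + b1
    h = 1.0 / (1.0 + np.exp(-z1))          # hidden activations
    out = h @ W2 + b2                      # network output
    diff = out - y
    loss = 0.5 * np.mean(np.sum(diff ** 2, axis=1))
    # Backward pass: propagate the output error toward the input
    n = X.shape[0]
    d_out = diff / n                       # dLoss/dout
    dW2 = h.T @ d_out
    db2 = d_out.sum(axis=0)
    d_h = d_out @ W2.T                     # error sent back to the hidden layer
    d_z1 = d_h * h * (1.0 - h)             # multiplied by the sigmoid derivative
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)
    return loss, [dW1, db1, dW2, db2]

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
y = rng.normal(size=(5, 2))
params = [rng.normal(size=(3, 4)), np.zeros(4), rng.normal(size=(4, 2)), np.zeros(2)]

loss, grads = forward_backward(params, X, y)

# Gradient check: compare one backprop entry with a central finite difference
eps = 1e-6
W1_plus = params[0].copy()
W1_plus[0, 0] += eps
W1_minus = params[0].copy()
W1_minus[0, 0] -= eps
numeric = (forward_backward([W1_plus] + params[1:], X, y)[0]
           - forward_backward([W1_minus] + params[1:], X, y)[0]) / (2 * eps)
print(grads[0][0, 0], numeric)   # the two values should agree closely

# One gradient-descent step with an assumed learning rate
lr = 0.1
params = [p - lr * g for p, g in zip(params, grads)]
```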

Q9: Explain Logistic regression and its relationship to neural networks.

A9: Logistic regression is a linear classification model used to model the probability of a binary outcome. It can be seen as a simplified version of a single-layer neural network with a sigmoid activation function. While logistic regression is limited to linear decision boundaries, neural networks can learn more complex relationships in the data through non-linear activation functions and multiple layers.
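A plain-Python sketch of one-dimensional logistic regression as a single sigmoid neuron, trained by batch gradient descent on the cross-entropy loss. The data, learning rate, and epoch count are illustrative assumptions; the classes overlap slightly so that the weights stay finite.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.1, epochs=2000):
    """1-D logistic regression trained by batch gradient descent on cross-entropy loss.

    The model is a single neuron: p(y=1 | x) = sigmoid(w*x + b).
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean cross-entropy: mean of (p - y) * x and of (p - y)
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum((sigmoid(w * x + b) - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 1, 0, 1, 1, 1]            # overlapping classes around x = 2.5
w, b = train_logistic_regression(xs, ys)
print(round(sigmoid(w * 1.0 + b), 3), round(sigmoid(w * 4.0 + b), 3))  # low vs high probability
```

The decision boundary is the single point where $w x + b = 0$, which illustrates the linear-boundary restriction mentioned above.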

Q10: What are the advantages and disadvantages of logistic regression compared to neural networks?

A10:

- Advantages of logistic regression:

  - Simple and interpretable model.

  - Efficient computation and training.

  - Works well when the classes are (approximately) linearly separable, and outputs class probabilities.

- Disadvantages of logistic regression:

  - Limited capacity to capture complex patterns in the data.

  - Cannot learn non-linear decision boundaries unless non-linear features are engineered by hand.

  - Prone to underfitting when the true decision boundary is non-linear.

Module 6: Dimensionality Reduction

Q1: What is the Curse of Dimensionality, and how does it impact machine learning algorithms?

A1: The Curse of Dimensionality refers to various phenomena that arise when working with high-dimensional data. It includes problems such as increased computational complexity, sparsity of data points, and the deterioration of distance-based metrics. These issues can negatively impact the performance of machine learning algorithms, leading to overfitting, increased generalization error, and decreased interpretability.
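A small NumPy experiment illustrating the deterioration of distance-based metrics: for uniformly random points, the relative gap between the nearest and farthest neighbour of a query shrinks as the dimension grows. The helper name, point counts, and dimensions are arbitrary choices.

```python
import numpy as np

def relative_contrast(n_points, dim, rng):
    """(max - min) / min distance from a random query to uniform random points."""
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    dists = np.linalg.norm(points - query, axis=1)
    return (dists.max() - dists.min()) / dists.min()

rng = np.random.default_rng(42)
for dim in (2, 10, 100, 1000):
    print(dim, round(relative_contrast(500, dim, rng), 3))
# The contrast shrinks as dim grows: nearest and farthest neighbours become almost equally far.
```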

Q2: Differentiate between Feature Selection and Feature Extraction.

A2:

- Feature Selection: Feature Selection is the process of selecting a subset of relevant features from the original feature set. It aims to improve the performance of machine learning models by removing redundant or irrelevant features, reducing overfitting, and enhancing interpretability.

- Feature Extraction: Feature Extraction involves transforming the original features into a lower-dimensional space using mathematical techniques. It aims to capture the most important information while reducing the dimensionality of the data. Common methods include Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA).

Q3: What are Dimensionality Reduction Techniques, and why are they used in machine learning?

A3: Dimensionality Reduction Techniques are methods used to reduce the number of input features while preserving the most important information in the data. They are used in machine learning to address the Curse of Dimensionality, improve computational efficiency, and enhance model interpretability. Dimensionality reduction can also help mitigate overfitting and improve the generalization performance of models.

Q4: Explain Principal Component Analysis (PCA) and its applications in dimensionality reduction.

A4: Principal Component Analysis (PCA) is a linear dimensionality reduction technique used to transform the original features into a lower-dimensional space. It achieves this by finding the principal components, which are orthogonal vectors that capture the directions of maximum variance in the data. PCA is widely used in various applications, including data visualization, feature extraction, and noise reduction. It can help identify the underlying structure of the data and highlight the most important patterns.

Q5: How does PCA address the Curse of Dimensionality?

A5: PCA addresses the Curse of Dimensionality by reducing the dimensionality of the data while preserving most of its variance. By transforming the original features into a lower-dimensional space, PCA can help mitigate problems such as sparsity of data points and increased computational complexity associated with high-dimensional data. It also aids in visualization and interpretation of the data by capturing the most important patterns in a reduced space.

Q6: What is the process of applying PCA to a dataset, and how are the principal components determined?

A6:

- Process of applying PCA:

  - Center the data by subtracting the mean of each feature (required); also scale each feature to unit variance if the features are measured on different scales.

  - Compute the covariance matrix of the centered (or standardized) data.

  - Perform eigendecomposition on the covariance matrix to obtain the eigenvectors and eigenvalues.

  - Sort the eigenvalues in descending order and select the top k eigenvectors corresponding to the largest eigenvalues.

  - Project the centered data onto the selected eigenvectors to obtain the transformed dataset.

- Determining principal components:

  - The principal components are the eigenvectors of the covariance matrix, which capture the directions of maximum variance in the data; each corresponding eigenvalue gives the variance along that direction. They are determined through eigendecomposition of the covariance matrix (or, equivalently, through the SVD of the centered data matrix).
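The steps above can be sketched with NumPy as follows. This version only centers the data (no scaling) and uses synthetic 3-D data that varies mostly along one direction; the function name `pca` is hypothetical (scikit-learn provides a full `PCA` class).

```python
import numpy as np

def pca(X, k):
    """PCA via eigendecomposition of the covariance matrix.

    Returns the projected data, the top-k components (as columns), and all
    eigenvalues in descending order.
    """
    X_centered = X - X.mean(axis=0)                 # 1. center each feature
    cov = np.cov(X_centered, rowvar=False)          # 2. covariance matrix (features x features)
    eigvals, eigvecs = np.linalg.eigh(cov)          # 3. eigendecomposition (ascending order)
    order = np.argsort(eigvals)[::-1]               # 4. sort by descending eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :k]                     #    keep the top-k eigenvectors
    return X_centered @ components, components, eigvals   # 5. project

rng = np.random.default_rng(0)
# Synthetic 3-D data that mostly varies along the direction (1, 2, 0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t + 0.1 * rng.normal(size=200), 0.1 * rng.normal(size=200)])

Z, components, eigvals = pca(X, k=1)
print(eigvals)          # one eigenvalue dominates
print(Z.shape)          # (200, 1)
```

The variance of the projected data along each component equals the corresponding eigenvalue.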

Q7: What are some limitations of PCA?

A7:

- Linearity: PCA assumes that the underlying relationships in the data are linear, which may not always hold true.

- Orthogonality: PCA seeks orthogonal principal components, which may not be the most meaningful representation for some datasets.

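- Gaussian Assumption: PCA does not strictly require Gaussian data, but it relies only on means and covariances (second-order statistics). These fully describe the data only when it is approximately Gaussian, so for strongly non-Gaussian data, structure that appears only in higher-order statistics may be missed.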

- Loss of Interpretability: The principal components obtained through PCA may be difficult to interpret in terms of the original features, especially when dealing with high-dimensional data.

Q8: How do you choose the number of principal components to retain in PCA?

A8: The number of principal components to retain in PCA is typically chosen using the cumulative explained variance ratio: the sum of the k largest eigenvalues divided by the sum of all eigenvalues, i.e., the proportion of the total variance captured by the first k principal components. A common approach is to retain the smallest number of components that captures a chosen fraction (e.g., 95%) of the total variance in the data.
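A plain-Python sketch of this selection rule, given covariance eigenvalues already sorted in descending order; the function name and example eigenvalues are illustrative. (scikit-learn's `PCA` exposes the per-component ratios as `explained_variance_ratio_`.)

```python
def n_components_for_variance(eigenvalues, threshold=0.95):
    """Smallest k whose cumulative explained variance ratio reaches the threshold.

    `eigenvalues` are the covariance eigenvalues sorted in descending order.
    """
    total = sum(eigenvalues)
    cumulative = 0.0
    for k, lam in enumerate(eigenvalues, start=1):
        cumulative += lam / total            # explained variance ratio of component k
        if cumulative >= threshold:
            return k
    return len(eigenvalues)

eigs = [4.0, 2.0, 1.0, 1.0]                   # ratios 0.5, 0.25, 0.125, 0.125
print(n_components_for_variance(eigs, 0.8))   # 3 (cumulative 0.5, 0.75, 0.875)
print(n_components_for_variance(eigs, 0.95))  # 4
```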